A robots.txt file tells crawlers whether they may crawl the hostnames that serve your redirects.

You may specify a robots.txt configuration at the team level or at the hostname level. When it is defined at the team level, all hostnames automatically inherit that setting.

This feature is available from the Basic plan and up.

No robots.txt (default)

No file is served at the "robots.txt" path, and crawlers will keep crawling. Crawlers generally treat a missing robots.txt the same as "allowing all".

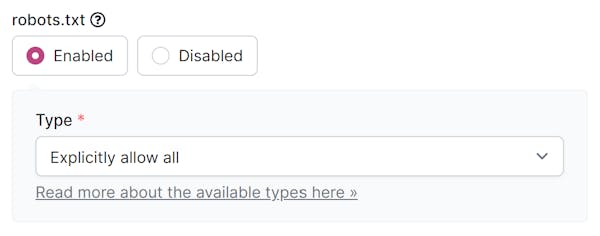

Explicitly allow all

We serve a robots.txt file with the contents below. This has the same effect as "no robots.txt" (the default option), but states explicitly that crawling is allowed.

    User-agent: *
    Disallow:
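
To illustrate how a well-behaved crawler would read this file, here is a minimal sketch using Python's standard `urllib.robotparser` module. The crawler name `ExampleCrawler`, the URL, and the helper `is_allowed` are made up for the example; this is not how any particular crawler is implemented.

```python
from urllib.robotparser import RobotFileParser

# The "explicitly allow all" file: an empty Disallow value blocks nothing.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "ExampleCrawler" and the URL are hypothetical values for illustration.
print(is_allowed(ALLOW_ALL, "ExampleCrawler", "https://example.com/any/path"))
```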

Disallow bad bots

We serve a robots.txt file that disallows most known "bad bots". This can help decrease the number of hits on your account, as bad bots that honor the file stop crawling.

The list used is ported from here. Example file: https://redirect.pizza/robots.txt
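
As a rough sketch of how per-bot rules behave, the snippet below parses a tiny robots.txt that blocks one bot and allows everyone else. The bot name `ExampleBadBot` and the helper `is_allowed_for` are invented for the example and are not taken from the actual list; the real file is much longer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical two-group file: one named bot is blocked, all others are allowed.
BAD_BOTS_SAMPLE = """\
User-agent: ExampleBadBot
Disallow: /

User-agent: *
Disallow:
"""


def is_allowed_for(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://example.com/some/path"  # assumed URL, for illustration only
print(is_allowed_for(BAD_BOTS_SAMPLE, "ExampleBadBot", url))    # blocked bot
print(is_allowed_for(BAD_BOTS_SAMPLE, "SomeOtherCrawler", url))  # any other bot
```

Only bots that read and respect the file are affected by the named group.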

Disallow all

We will serve a robots.txt file with the contents below. This will let the crawlers know that no crawling is allowed.

    User-agent: *
    Disallow: /
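
For comparison, a compliant crawler reading this file would refuse every path. The sketch below checks that with Python's standard `urllib.robotparser`; the crawler name, the URLs, and the helper `may_crawl` are assumptions made for the example.

```python
from urllib.robotparser import RobotFileParser

# The "disallow all" file: "Disallow: /" matches every path on the hostname.
DISALLOW_ALL = """\
User-agent: *
Disallow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical crawler name and URLs, for illustration only.
for url in ("https://example.com/", "https://example.com/deep/link"):
    print(url, may_crawl(DISALLOW_ALL, "ExampleCrawler", url))
```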

Custom

For custom requirements, you may specify a custom robots.txt file. This requires a Dedicated IP, as the custom file is served from our edge network. Contact sales for more info.

Closing

Please note that the robots.txt file is only guidance for crawlers. Each crawler decides for itself whether to honor the defined settings. Most bots and crawlers do, but some bad bots may still choose to ignore them.

Please consult your SEO expert to determine which option is the best fit for you.